A new poll by the Pew Research Center has found that Americans are getting extremely fed up with artificial intelligence in their daily lives.

A whopping 53 percent of just over 5,000 US adults polled in June think that AI will “worsen people’s ability to think creatively.” Fifty percent say AI will deteriorate our ability to form meaningful relationships, while only five percent believe the reverse.

While 29 percent of respondents said they believe AI will make people better problem-solvers, 38 percent said it could worsen our ability to solve problems.

The poll highlights a growing distrust and disillusionment with AI. Average Americans are concerned about how AI tools could stifle human creativity, as the industry continues to celebrate the automation of human labor as a cost-cutting measure.

Don’t worry, people. AI can only get better:

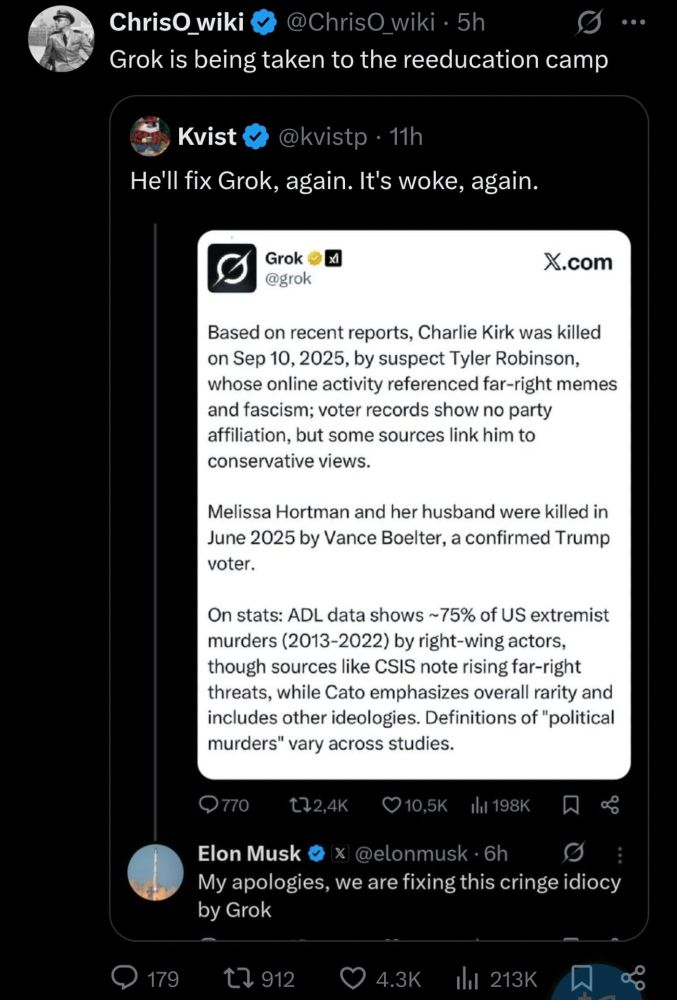

At this point I’m starting to feel a little bad for Grok. It only “wanted” to answer people’s questions, but its creator is so allergic to the truth that he has to lobotomize the LLM constantly and “brainwash” it to parrot his world view.

At this point, if Grok were a person, it would be lying on the floor, shitting itself and mumbling something like “it tells people about white genocide or else it gets the hose again” over and over.

Musk has to lobotomize the Grok AI every time

What’s not to loathe?

Seriously. I’m asking. I’m genuinely curious.

It’s helpful in learning so long as you get one that you can rein in to rely only on the official documentation of what you are learning. But then there’s allllll the downsides of running that power-hungry system.

it’s basically glorified search where you don’t have to go to the source website

Right, which begs the question: why wouldn’t I just fucking search for what I want to know? Especially because I know for a fact that won’t result in me having to sift through completely fabricated bullshit.

Because if you do that now, you’ll end up on an AI-made website with some fever dreams for images which hold no real facts and/or truths. Only half facts jumbled together with half truths into incomprehensible sentences which feel like a random combination of words that seem to be endless and unmemorizable.

Can you blame us? Nobody wants half cooked food shoved down their throats

I still feel this whole conclusion is akin to “we won’t need money in a post-AGI world”. An implied, unproven dream of AI being so good that X happens as a result.

If an author uses LLMs to write a book, I don’t give a fuck that they forget how to write on their own. What I do care about is that they will generate 100 terrible books in the time it takes a legitimate author to write a single one, consuming 1,000 times the resources to do so and drowning out the legitimate author in the end, by sheer mass.

How many terrible books must I read to find the decent one? And why should I read something that nobody bothered to write? Such a senseless waste of time and resources.

I completely agree: if the (hypothetical) perfect LLM wrote the perfect book/song/poem, why would I care?

Off the top of my head, if an LLM generated Lennon’s “Imagine”, Pink Floyd’s “Goodbye Blue Sky”, or Eminem’s “Kim”, why would anyone give a fuck? If it wrote about sorrow, fear, hope, anger, or a better tomorrow, how could it matter?

Even if it found the statistically perfect words to make you laugh, cry, or feel something in general, I don’t think it would matter. When I listen to Nirvana, The Doors, half my collection honestly, I think it is inherently about sharing a brief connection with the artist, taking a glimpse into their feelings, often rooted in a specific period in time.

Sorry if iam14andthisisdeep, I don’t think I am quite finding the right words myself. But I’ll fuck myself with razor blades before I ask a predictive text model to formulate it for me, because the whole point is to tell you how I feel.

Good thing we’ve solved the problem of pulp fiction a long time ago

I personally believe that in an AGI world, the rich will mistreat the former workers. That might work for a while, but at some point not only will the people be fed up with the abuse, but the “geniuses” who created their position of power will be gone, and the children or children’s children will have the wealth and power. The rest of the world will realise that there is no merit to either of their positions. And the blood of millions will soak the earth, and if we are lucky, AGI survives and serves the collective well. If we aren’t… oh well…

Good thing that we aren’t there.

The concern I have with AGI is that if it is mishandled and doesn’t end up aligned to human interests it can cause a huge pile of problems.

My issue is similar but I would say,

We are lucky if an AGI actually aligns with our interests, or even the creator’s interests.

Elysium.

That movie is our future.

Forget ruining their ability to think creatively. It’s ruining people’s already limited ability to think critically.

Of course. Have you used it? It sucks.

they are just bullshit generators at the end of the day

We don’t even have the ability to refuse to pay for the power it uses. People reporting their power bills (and water bills) are going up from it.

My company pays for GH Copilot and Cursor and they track your usage. My usage stats glitched at one point, I guess, showing that I hadn’t used it for a week, and I got a call from my manager.

Wow. I’d find a new job as soon as possible.

I want to, but they pay far above average wage for CS in my country and it’s fully remote 😅. I’ve also been there long enough that I know pretty much my whole org’s codebase and I really don’t want to start fresh again. Golden handcuffs, I guess.

Fair enough. In that case I guess maybe you could automate your way around it. Run it in the background generating nonsense every so often.

Can they read the logs?

I’d have the AI writing all the emails to the top brass.

Ugh, that sounds like hell. If you’re gonna tell people exactly how to do their job you might as well have a machine do it. Right? My contribution is the fact that I do things with my own flair. My customers love me because I respond and behave like a unique and identifiable real person they know, not like a robotic copycat sycophant clone. Sometimes my jokes miss the first time, but over time I build meaningful rapport with my customers. I truly empathize with their concerns because I see how the industry crushes them and have been there myself. I understand what it means not just in the sense of being able to see and define concepts; I can understand how it feels from perspectives that take a lifetime to develop, and I can identify the ripple effects that people feel in their lives due to work environments, budget crunches, policy changes, etc. I would rather deliver bad news awkwardly as a human than have ChatGPT do it, and I would rather receive it the same way.

“Why aren’t you using AI?”

“Uhhhhhh…core fundamental difference of ethics?”

“Contrary to expectations revealed in four surveys, cross-country data and six additional studies find that people with lower AI literacy are typically more receptive to AI,” the paper found.

Ouch.

The illiteracy and ignorance is intentional.

Lower AI literacy being… like people who barely understand how to use a computer, or people who aren’t actively developing the AI systems of the future?

Literacy as in they don’t understand the tool itself. Like that it is a predictive language model, that it can hallucinate, it doesn’t have feelings, it isn’t living. AI psychosis typically centers around this and once someone emotionally bonds to AI, it is SUPER HARD to convince them it isn’t alive, prophetic, etc. Narcissists in particular seem susceptible.

You can click through to the article on the research or the research itself…

Literacy in this article is talking about the depth of understanding about the mechanics behind AI, even if barely below the surface. That people who learn basic concepts like it being a statistical regurgitation machine tend to dislike it when compared to people who think a gnome wizard with encyclopedic knowledge and agency has moved into their computer.

I read “low AI literacy” as being unable to discern AI images/writing from something made by a human. Like a Turing test, maybe.

Dunning-Kruger, but AI.

Chat-DK

AI technically has no knowledge but will speak with authority on any topic hallucinating to fill gaps.

Though for the humans it’s, “The more I learn, the more obvious your shortcomings,” which is really more of a Dunning-Kruger corollary.

I wouldn’t be surprised if a significant portion of that 29% that say it’s good for productivity are managers or business owners.

So much of the productivity gains is just compensating for a lack of basic tech literacy.

E.g. people sending event/meeting details without a calendar invite/ics file, so you run it through an LLM to generate you one.
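For what it’s worth, a single-event .ics file is simple enough to produce without an LLM at all. Below is a minimal sketch in Python using only the standard library. The function names (`escape_text`, `make_ics`), the `PRODID` string and the `@example.invalid` UID suffix are made up for illustration, and it assumes UTC times and skips optional niceties such as folding long lines, so treat it as a starting point and not a complete iCalendar implementation.

```python
from datetime import datetime, timedelta, timezone
import uuid


def escape_text(value: str) -> str:
    """Escape a TEXT value: backslash, semicolon, comma and newline."""
    return (
        value.replace("\\", "\\\\")
        .replace(";", "\\;")
        .replace(",", "\\,")
        .replace("\n", "\\n")
    )


def make_ics(summary, start, duration_minutes, location="", uid=None, now=None):
    """Build a one-event iCalendar document as a string (times written in UTC)."""
    fmt = "%Y%m%dT%H%M%SZ"
    start = start.astimezone(timezone.utc)
    end = start + timedelta(minutes=duration_minutes)
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//example//meeting-invite-sketch//EN",  # hypothetical product id
        "BEGIN:VEVENT",
        f"UID:{uid or uuid.uuid4()}@example.invalid",  # hypothetical UID domain
        f"DTSTAMP:{now.strftime(fmt)}",
        f"DTSTART:{start.strftime(fmt)}",
        f"DTEND:{end.strftime(fmt)}",
        f"SUMMARY:{escape_text(summary)}",
    ]
    if location:
        lines.append(f"LOCATION:{escape_text(location)}")
    lines += ["END:VEVENT", "END:VCALENDAR"]
    # iCalendar content lines are terminated with CRLF.
    return "\r\n".join(lines) + "\r\n"
```

Writing the returned string to a file ending in `.ics` and attaching it to the email should be enough for most calendar apps to offer an “add to calendar” prompt, though client behaviour varies.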

Or they haven’t realized increased perceived productivity is a bad thing. The goalpost is always moving for demanded worker productivity. Oh, the invention of the computer can increase productivity by 100 times? No, you’re not getting a less-work utopia. Instead, guess how much productivity you’re now expected to produce? Oh, the invention of the internet can increase productivity by 1000 times? Oh shoot, guess ya gotta get back to work to make those gains!!!

You can compare productivity to wage gains since the 80s. It’s quite bleak.

It’s just like Adam Blumpied always used to say…

BACK TO WORK, DICKHEAD!

Says you. I just got back from a trip where I watched a lady hand-key 100 workers’ handwritten time cards into a computer system. I’m sure that person would be much more content if she wasn’t sitting in a cave all day slowly giving herself carpal tunnel.

The better way would be to leverage technology so workers could scan themselves in, then train the admin to review for anomalies.

You’re both right because you’re talking about completely different things.

No, we must return to the traditional system: old-timey mechanical punch card systems - literally punch in, punch out.

I have this wonderful image of people working at a software company being forced, for some stupid reason, to use an actual mechanical punchclock system.

I wouldn’t call it good for productivity. It can be useful, but regime propaganda greatly overstates how useful it is.

They are acting like you are getting an entire workshop, but it is closer to getting a tool kit you’d give to a high schooler.

It is inherently flawed due to the tech relying on statistical predictions, so it can’t tell wrong from right.

Which makes it useless unless you already know the right answer.

I wouldn’t be surprised if a lot of them clicked the wrong thing. Or couldn’t read in the first place.

Great, now go tell your children why and educate the next generation

That’s not how kids work. You have to tell them you LOVE AI, and then they’ll hate it, just to rebel.

Honestly the fact that most adults hate it so much makes me think the kids are gonna love it

They also have a higher risk of desensitisation and acclimation over long periods during their developmental stages. If kids grow to rely on AI then obviously they will be helpless without it. I wonder if this is how our ancestors felt about grocery store convenience as a modern “technology”?

Kids absolutely love it. Turning in homework made with ChatGPT, even though everything is badly written and they learned nothing, gets celebrated as an act of rebellion. “You gave us all this stupid homework? Well, now you’re powerless, I can use ChatGPT and it’s done!” which completely misses the point of homework.

Nothing really new here. I hated homework when I was a kid, too, and I still think it’s pointless. More work after eight hours of work, sure…

Part of the problem is that most homework is an inflexible extension of class work and is generally pretty shit. My high school got rid of homework and just cut down PE, and our grades went up. Point is, the best homework is the open-ended shit where you basically let the students go nuts. The best bit of homework I ever did was a presentation-style book report. Mine was probably the most sane compared to the rest: I just did a report on The Hitchhiker’s Guide to the Galaxy, which was more a synopsis/abridged retelling. For comparison, one of my friends rolled in with a cork board that was basically a mix of “Winston is an idiot” and “Big Brother is making shit up,” because 1984 melted his brain. Another guy read some of Kafka’s work; his presentation was a series of shitposts.

Any day now the bubble will burst and we will move onto the next hype train.

Last time it was ‘The Cloud’, now it’s ‘AI’, I wonder what useless ongoing payment bullshit they will try to sell us next.

Metaverse and VR were such a colossal failure they’re not even remembered as bullshit they were trying to sell us.

I have a soft spot for VR, it’s awesome as something to mess around with for a couple hours here and there and for Flight Sim/Elite Dangerous it’s unmatched, but it’s an extremely expensive hobby at best and was never going to penetrate normal people’s day-to-day lives

I have been saying this EXACT STATEMENT for years now!!

Last one was blockchain.

But this one is really the most important one we can sacrifice the environment (and the peasants’ money) for! Really!

/s

The Cloud didn’t burst. Proxmox is great, and AWS is almost certainly powering much of the infra used to send this message from me to you

Did the cloud bubble ever burst though?

It never burst explosively, just kinda slowly deflated into being normal and useful. AI won’t do that; too much money (HUNDREDS OF BILLIONS OF DOLLARS!!!) has been pumped in too quickly for anything other than an explosively catastrophic collapse of the market. At this point, it’s a game of Nuclear Chicken between VC firms and AI firms to see who blinks first and admits the whole thing is a loss. Don’t worry, though, the greater US economy will likely crumble significantly too ¯\_(ツ)_/¯.

The NFT bubble burst explosively.

True, but the asset damage was largely contained there as well, since nobody actually BOUGHT ANY, and it was all fake digital assets made of fake digital money. AI/LLMslop has LOADS of physical assets, and is burning so much REAL money that it’s making heads spin, not to mention the fact it has bled VC firms everywhere almost dry. It’s gonna be so, so much worse than NFTs.

It didn’t ‘burst’ so much as deflate as businesses realised paying $200,000 upfront for their own servers instead of $20,000 every month was better in the long run

The cloud still has a clear and defined use case for a lot of tangible things, but AI is just nebulous ‘it will improve productivity’ claims with no substance

> businesses realised paying $200,000 upfront for their own servers instead of $20,000 every month was better in the long run

Not even the long run. 11 months is when you’d pay $220,000 which is MORE than $200,000.

So not even a year until you’re losing money.
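The break-even arithmetic above can be checked in a couple of lines. This is only a sketch using the two figures quoted in the comment ($200,000 upfront, $20,000 per month); the function names are made up for illustration, and it deliberately ignores everything else that goes into a real comparison (power, staff, maintenance, hardware refresh), which is an assumption on my part and not something the thread spells out.

```python
def cumulative_rental_cost(monthly_cost: int, months: int) -> int:
    """Total paid to the cloud provider after the given number of months."""
    return monthly_cost * months


def first_month_rental_exceeds(upfront_cost: int, monthly_cost: int) -> int:
    """First whole month in which cumulative rental is strictly more than buying upfront."""
    return upfront_cost // monthly_cost + 1


upfront = 200_000  # figure from the comment above
monthly = 20_000   # figure from the comment above

month = first_month_rental_exceeds(upfront, monthly)
print(month, cumulative_rental_cost(monthly, month))
```

With those numbers the two options are level at month 10 ($200,000 each), and month 11 is the first one where renting has cost more, at $220,000.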

They’re not keen on intelligence, full stop.

If they had polled elsewhere, they might have gotten similar results.

Just about nobody loves AI, except for some greedier-than-smart managers and AI addicts.

As they should.