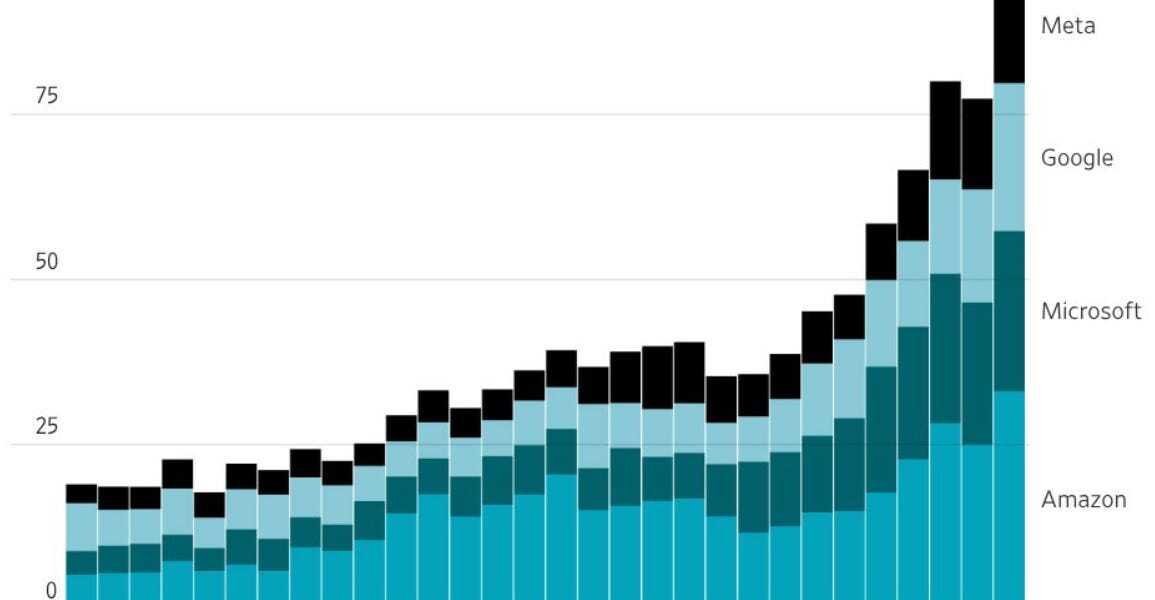

The US economy is splitting in two. There’s a rip-roaring AI economy. And there’s a lackluster consumer economy. Last quarter, spending on AI outpaced the growth in consumer spending.

Yet the whole ‘AI economy’ is itself a house of cards. Companies are losing hundreds of billions, and all the gains are in speculative valuations. The only winner here is Nvidia, and all it does is flip Taiwan’s chips to cash-burning companies.

The study on how AI assistants interact with programmers definitely matches my experience. I’m actually significantly faster with the assistant off, but I feel slower.

It’s like crack. It can take the dumb idea you had in your head but never wrote down and put it on the screen in seconds, and then you spend 10 minutes following it down that hole, only to delete everything and end up back where you started.

This isn’t productive, but it feels productive because there’s tons of code being written.

I feel like it’s that way for every application, but SWE in particular hits the wall faster because there’s not a lot of slack in software, and once you hit code review and testing, the issues become obvious.

A teacher using AI for lesson plans and letting it slip in lies without checking is more insidious, because we might not see the effects of that for a decade.

My kid got a social studies review sheet that said that Mount Everest is in the city of Nepal. And yes, we know that teacher uses AI for their lesson plans.

It used to be so exciting to find out that the answer in the back of the book was wrong and you were right the whole time…

Personally, I’ve had a pretty positive experience with the coding assistants, but I had to spend some time developing an intuition for the types of tasks they’re likely to do well. For example, if you need to crap out a UI based on a JSON payload, make a service call, or add a server endpoint, LLMs will typically get it right in one shot. These are common operations that are easily extrapolated from their training data. Where they tend to fail is on tasks like business logic, which have specific requirements that don’t generalize easily.

I’ve also found that writing the scaffolding for the code yourself really helps focus the agent. I’ll typically add stubs for the functions I want and create the overall code structure, then have the agent fill in the blanks. I’ve found this is a really effective way to keep the agent from going off into the weeds.
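For anyone wondering what that scaffolding might look like, here’s a rough sketch assuming a Python codebase. Every name in it (`Order`, `parse_orders`, `order_total_cents`, `handle_checkout`) is made up for illustration. The human writes the data type, signatures, docstrings, and glue code; the stub bodies that raise `NotImplementedError` are the only parts handed to the agent.

```python
# Hypothetical scaffold: the human writes the structure, signatures,
# and docstrings; the agent is asked to fill in only the stub bodies.
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Order:
    """A single line item from an incoming order payload."""
    sku: str
    quantity: int
    unit_price_cents: int


def parse_orders(payload: dict) -> list[Order]:
    """Convert the raw JSON payload into Order objects.

    TODO(agent): validate that every item has sku, quantity, unit_price_cents.
    """
    raise NotImplementedError  # stub left for the agent


def order_total_cents(orders: list[Order]) -> int:
    """Sum quantity * unit_price_cents across all orders."""
    raise NotImplementedError  # stub left for the agent


def handle_checkout(payload: dict) -> int:
    """Human-written glue that fixes the call order before the agent starts."""
    orders = parse_orders(payload)
    return order_total_cents(orders)
```

Keeping `handle_checkout` human-written means the data flow is already decided, so the agent only has to fill in small, well-specified bodies instead of inventing its own structure.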

I also find that if it doesn’t get things right on the first shot, chances are it’s not going to fix the underlying problems. It tends to just pile kludges on top to address the problems you tell it about. If it didn’t get it mostly right at the start, it’s better to just do it yourself.

All that said, I find enjoyment is an important aspect as well and shouldn’t be dismissed. If you’re less productive, but you enjoy the process more, then I see that as a net positive. If all LLMs accomplish is to make development more fun, that’s a good thing.

This has to be a bit, right?

Have you actually used these tools?

I find it helps to tell it I want very terse answers and to never respond with more than one or two steps at a time, so I can tell it when it has just crapped out a bunch of wrong info. That helps tremendously.

Definitely. I’d love to see a visual tool, like a scene graph, where it could propose nodes and you could review each one and decide whether to add it or make changes.

This article’s gloating about AI use in education and academia is very depressing tbh.

Look at my rate of profit, dog.